Deep Dive on Detection Covering the Prevention Gap

As we discussed earlier in this blog series, preventative and detective controls are incentivized to reduce false positives and provide high-fidelity responses. More specifically, these tools use confusion matrices to optimize for accuracy and precision. Optimizing for accuracy, however, does not eliminate misclassifications. “Anton on Security” discusses many of the issues in detection engineering in his blog series here.
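To make the accuracy/precision distinction concrete, here is a minimal Python sketch that computes accuracy, precision, and recall from a binary confusion matrix for a single detection rule. The `ConfusionMatrix` class and the counts are hypothetical, chosen to show how a rule can score very high accuracy on imbalanced telemetry while still missing most malicious events.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionMatrix:
    """Binary confusion matrix for one detection rule (hypothetical counts)."""
    tp: int  # malicious events that raised an alert
    fp: int  # benign events that raised an alert
    fn: int  # malicious events that were missed
    tn: int  # benign events that were correctly ignored

    def accuracy(self) -> float:
        total = self.tp + self.fp + self.fn + self.tn
        return (self.tp + self.tn) / total if total else 0.0

    def precision(self) -> float:
        alerts = self.tp + self.fp
        return self.tp / alerts if alerts else 0.0

    def recall(self) -> float:
        malicious = self.tp + self.fn
        return self.tp / malicious if malicious else 0.0


# One day of telemetry: 10,000 events, 18 of them malicious.
cm = ConfusionMatrix(tp=8, fp=2, fn=10, tn=9980)
print(f"accuracy={cm.accuracy():.4f} precision={cm.precision():.2f} recall={cm.recall():.2f}")
# prints: accuracy=0.9988 precision=0.80 recall=0.44
```

Because benign events dominate real telemetry, true negatives inflate accuracy; precision and recall expose the misclassifications that accuracy hides.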

There are many factors that impact the accuracy of a detection (or prevention response), including:

- Behavior patterns: normal IT functions, or an application operating as intended
- Contextual patterns: traffic that is normal for a DMZ but not for an internal system
- Data or input validation: a data query that is allowed but not intended
- The “People Effect”: users find unintended ways to use tools or applications
- Unknown unknowns: the application or security tool enters a state the developer never identified

In all of these cases, uncertainty or a lack of data keeps a security tool from acting with a high level of accuracy and precision. We can look to the scientific world for insight into how to address these issues.

Multi-Class Confusion Matrix: The first approach is to use a multi-class confusion matrix to frame the detection problem. The mathematics of multi-class classification is explained well in the V7Labs confusion matrix guide. What does this mean for security detections? Did we classify the problem with too few labels or inputs? Perhaps we have oversimplified the detection and need to consider more inputs or states.
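A minimal sketch of the multi-class idea, assuming three hypothetical labels (`benign`, `admin_tooling`, `malicious`) in place of a single benign/malicious split. It builds the confusion matrix from paired actual and predicted labels and derives one-vs-rest precision and recall per class; the off-diagonal cells show which states the detection confuses.

```python
from collections import Counter

# Hypothetical labels replacing a single benign/malicious split.
LABELS = ["benign", "admin_tooling", "malicious"]


def confusion_matrix(actual, predicted, labels):
    """Rows are actual labels, columns are predicted labels."""
    counts = Counter(zip(actual, predicted))
    return [[counts[(a, p)] for p in labels] for a in labels]


def per_class_precision_recall(matrix, labels):
    """Return {label: (precision, recall)} computed one-vs-rest from the matrix."""
    results = {}
    for i, label in enumerate(labels):
        tp = matrix[i][i]
        predicted_as = sum(row[i] for row in matrix)  # column total
        actually_is = sum(matrix[i])                  # row total
        precision = tp / predicted_as if predicted_as else 0.0
        recall = tp / actually_is if actually_is else 0.0
        results[label] = (precision, recall)
    return results


actual = ["benign", "benign", "admin_tooling", "admin_tooling", "malicious", "malicious"]
predicted = ["benign", "admin_tooling", "admin_tooling", "malicious", "malicious", "admin_tooling"]

matrix = confusion_matrix(actual, predicted, LABELS)
for label, row in zip(LABELS, matrix):
    print(f"{label:>14}: {row}")
for label, (p, r) in per_class_precision_recall(matrix, LABELS).items():
    print(f"{label:>14}: precision={p:.2f} recall={r:.2f}")
```

In this toy data, the `admin_tooling` and `malicious` classes bleed into each other, which is the kind of overlap a binary matrix would fold into a single false-positive or false-negative count.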

Adding Context: If more states or inputs are needed, we will likely need more data. Several SIEM vendors have entered the market promising more contextualization. The approach is to stitch together related events from disparate telemetry sources: for example, an unusual API query and the authentication token of the user who submitted it.
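The event-stitching idea can be sketched as a join on a shared key. This Python example assumes a hypothetical schema in which both authentication events and API events carry a `token_id`; it attaches the issuing authentication event to each API call and flags calls made outside an assumed one-hour token lifetime. Real SIEM correlation engines are far richer; this only illustrates the join.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass(frozen=True)
class AuthEvent:
    token_id: str  # hypothetical key shared between telemetry sources
    user: str
    source_ip: str
    issued_at: datetime


@dataclass(frozen=True)
class ApiEvent:
    token_id: str
    endpoint: str
    at: datetime


@dataclass(frozen=True)
class EnrichedEvent:
    api: ApiEvent
    auth: Optional[AuthEvent]    # None if no issuing auth event was found
    within_token_lifetime: bool  # False for unmatched or out-of-window use


def stitch(api_events, auth_events, max_token_age=timedelta(hours=1)):
    """Attach the issuing authentication event to each API event by token_id."""
    issued_by_token = {a.token_id: a for a in auth_events}
    enriched = []
    for event in api_events:
        auth = issued_by_token.get(event.token_id)
        within = (
            auth is not None
            and auth.issued_at <= event.at <= auth.issued_at + max_token_age
        )
        enriched.append(EnrichedEvent(event, auth, within))
    return enriched


t0 = datetime(2024, 1, 1, 9, 0)
auths = [AuthEvent("tok-1", "alice", "10.0.0.5", t0)]
apis = [
    ApiEvent("tok-1", "/admin/export", t0 + timedelta(minutes=5)),
    ApiEvent("tok-1", "/admin/export", t0 + timedelta(hours=3)),
    ApiEvent("tok-9", "/admin/export", t0 + timedelta(minutes=6)),
]
for e in stitch(apis, auths):
    user = e.auth.user if e.auth else "unknown"
    print(e.api.endpoint, user, e.within_token_lifetime)
```

The second call reuses a token hours after issuance and the third presents a token with no known origin; either is context a lone API log line cannot supply.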

Behavioral Analysis: In some cases, the observed activity may or may not be part of an established pattern of behavior, which means a temporal element must be added as an input to the state. Many user and entity behavior analytics (UEBA) products focus on this space, answering questions such as: has user X ever logged into application Y at time T from location L?
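A minimal sketch of the UEBA question above, assuming a simple set-membership baseline: it records which (hour bucket, location) pairs each user has been seen with for each application and flags combinations never seen before. The `LoginBaseline` class, the four-hour bucketing, and the location strings are illustrative assumptions; commercial UEBA products typically use statistical or machine-learning models rather than exact matching.

```python
from datetime import datetime


def hour_bucket(ts: datetime, width: int = 4) -> int:
    """Coarsen the hour of day into `width`-hour buckets (0-5 when width is 4)."""
    return ts.hour // width


class LoginBaseline:
    """Hypothetical baseline: (time bucket, location) pairs each user has used per app."""

    def __init__(self) -> None:
        self._seen: dict[tuple[str, str], set[tuple[int, str]]] = {}

    def learn(self, user: str, app: str, ts: datetime, location: str) -> None:
        self._seen.setdefault((user, app), set()).add((hour_bucket(ts), location))

    def is_novel(self, user: str, app: str, ts: datetime, location: str) -> bool:
        """True if user X has never logged into app Y in this time bucket from location L."""
        return (hour_bucket(ts), location) not in self._seen.get((user, app), set())


baseline = LoginBaseline()
baseline.learn("alice", "payroll", datetime(2024, 1, 2, 9, 15), "New York")
baseline.learn("alice", "payroll", datetime(2024, 1, 3, 13, 40), "New York")

print(baseline.is_novel("alice", "payroll", datetime(2024, 1, 9, 10, 30), "New York"))  # False
print(baseline.is_novel("alice", "payroll", datetime(2024, 1, 9, 3, 5), "Lisbon"))      # True
print(baseline.is_novel("bob", "payroll", datetime(2024, 1, 9, 10, 30), "New York"))    # True
```

Bucketing the hour trades sensitivity for fewer alerts: a login at 10:30 matches a prior 09:15 login because both fall in the same four-hour bucket.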

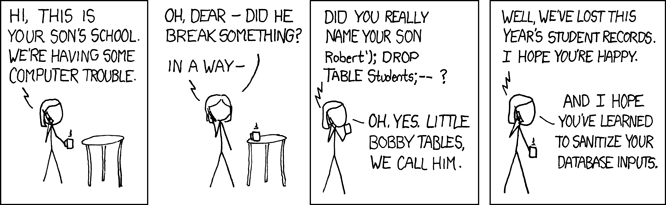

Input Validation: Application inputs are routinely tested for expected behavior, that is, verification that the input is well formed and handled correctly. It is much harder to test the validation of an input: whether it is something the application was intended to receive and respond to. A simple example is the query-language escape in the classic SQL injection string `Robert'); DROP TABLE Students;--`. With the abundance of application integrations and the growing presence of APIs, this problem is exploding.
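The verification/validation distinction can be illustrated with Python's built-in `sqlite3` module. Parameter binding (the `?` placeholder) keeps the input from ever being interpreted as SQL. The allowlist regular expression is a hypothetical stand-in for validation, rejecting input the application never intended to accept; a real definition of “intended” input is application-specific and usually much harder to express.

```python
import re
import sqlite3

# Hypothetical allowlist for a person's name: letters, spaces, apostrophes, hyphens.
NAME_PATTERN = re.compile(r"[A-Za-z][A-Za-z' -]{0,63}")


def add_student(conn: sqlite3.Connection, name: str) -> None:
    # Validation: is this input something the application intended to receive?
    if not NAME_PATTERN.fullmatch(name):
        raise ValueError(f"rejected unexpected input: {name!r}")
    # Safe handling: bind the value as data; never splice it into SQL text.
    conn.execute("INSERT INTO students (name) VALUES (?)", (name,))


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE students (name TEXT)")

add_student(conn, "Robert")
add_student(conn, "O'Brien")  # passes the allowlist despite the quote; binding keeps it safe
try:
    add_student(conn, "Robert'); DROP TABLE Students;--")
except ValueError as err:
    print(err)

print(conn.execute("SELECT name FROM students").fetchall())
```

`O'Brien` passes the allowlist yet contains a quote character, so parameter binding is still needed even for validated input.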

Dead-end State: In some cases, applications or security tools receive input and enter an unexpected state. This is often where error handling can play a role. Windows Error Reporting (WER) is one example: a WER event can provide context, or an additional input, that reveals an unexpected threat.
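WER itself is a Windows facility, so the following is only an analogous sketch in Python: a hypothetical decorator, `report_unexpected_state`, that turns an unhandled exception (a dead-end state) into a structured JSON event on a logger that a SIEM could ingest, then re-raises so normal error handling still applies. The logger name and event field names are assumptions.

```python
import functools
import json
import logging
import traceback

logger = logging.getLogger("unexpected_state")


def report_unexpected_state(func):
    """Emit a structured event when `func` ends in an unhandled exception, then re-raise."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        try:
            return func(*args, **kwargs)
        except Exception as exc:
            event = {
                "event_type": "unexpected_state",
                "function": func.__qualname__,
                "exception": type(exc).__name__,
                "message": str(exc),
                "input_repr": repr(args)[:256],  # truncated to bound event size
                "stack": traceback.format_exc(limit=5),
            }
            logger.error(json.dumps(event))
            raise
    return wrapper


@report_unexpected_state
def parse_port(value: str) -> int:
    port = int(value)
    if not 0 < port < 65536:
        raise ValueError(f"port out of range: {port}")
    return port


logging.basicConfig(level=logging.INFO)
print(parse_port("443"))
try:
    parse_port("99999")
except ValueError:
    pass  # the caller's normal error handling still runs after the event is emitted
```

Recording the offending input alongside the stack gives the detection pipeline the same kind of context a WER report provides: what the component received right before it failed.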

Moving from theory to practice, we need ways to identify these misclassifications: where do security controls or tools work with high precision and accuracy, and where is there a chance of misclassification? Again, we return to threat modeling. Using MITRE ATT&CK and MITRE D3FEND, we can identify attack scenarios, enumerate the security control responses, and look for places where uncertainty, missing inputs, or expanded states (in multi-class confusion matrices) exist. Issues to look for in this process include high-volume irregular traffic or events, events with many “use cases”, limited information in the telemetry, and overlap with approved behavior (“Living off the Land”). Detection engineering should focus its development on these gaps, where security tools misclassify. By doing so, we avoid duplicating preventions and detections already covered by existing tooling.
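The gap analysis can be sketched as a small data model. In this hypothetical Python example, each scenario is keyed by a MITRE ATT&CK technique ID (T1190 Exploit Public-Facing Application, T1218 System Binary Proxy Execution, T1078 Valid Accounts), lists the control responses with an analyst-assigned fidelity rating, and carries flags for the misclassification indicators described above. The control names, ratings, flag names, and scoring rule are illustrative assumptions, not part of ATT&CK or D3FEND.

```python
from dataclasses import dataclass, field

# Hypothetical names for the misclassification indicators discussed above.
RISK_FLAGS = {"high_volume", "many_use_cases", "limited_telemetry", "overlaps_approved_behavior"}


@dataclass
class ControlResponse:
    control: str   # hypothetical control name
    fidelity: str  # analyst rating: "high" or "low"


@dataclass
class Scenario:
    technique: str  # MITRE ATT&CK technique ID
    responses: list[ControlResponse] = field(default_factory=list)
    flags: set[str] = field(default_factory=set)


def detection_gaps(scenarios: list[Scenario]) -> list[Scenario]:
    """Scenarios with no high-fidelity control, ordered by number of risk flags."""
    gaps = [s for s in scenarios if not any(r.fidelity == "high" for r in s.responses)]
    return sorted(gaps, key=lambda s: len(s.flags & RISK_FLAGS), reverse=True)


scenarios = [
    Scenario("T1190", [ControlResponse("waf", "high")], {"high_volume"}),
    Scenario("T1218", [ControlResponse("edr", "low")], {"overlaps_approved_behavior", "many_use_cases"}),
    Scenario("T1078", [], {"limited_telemetry"}),
]
for gap in detection_gaps(scenarios):
    print(gap.technique, sorted(gap.flags))
```

Here T1218, a common Living-off-the-Land technique, ranks first because it has only a low-fidelity control and two risk flags, while T1190 drops out because an existing control already covers it with high fidelity.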